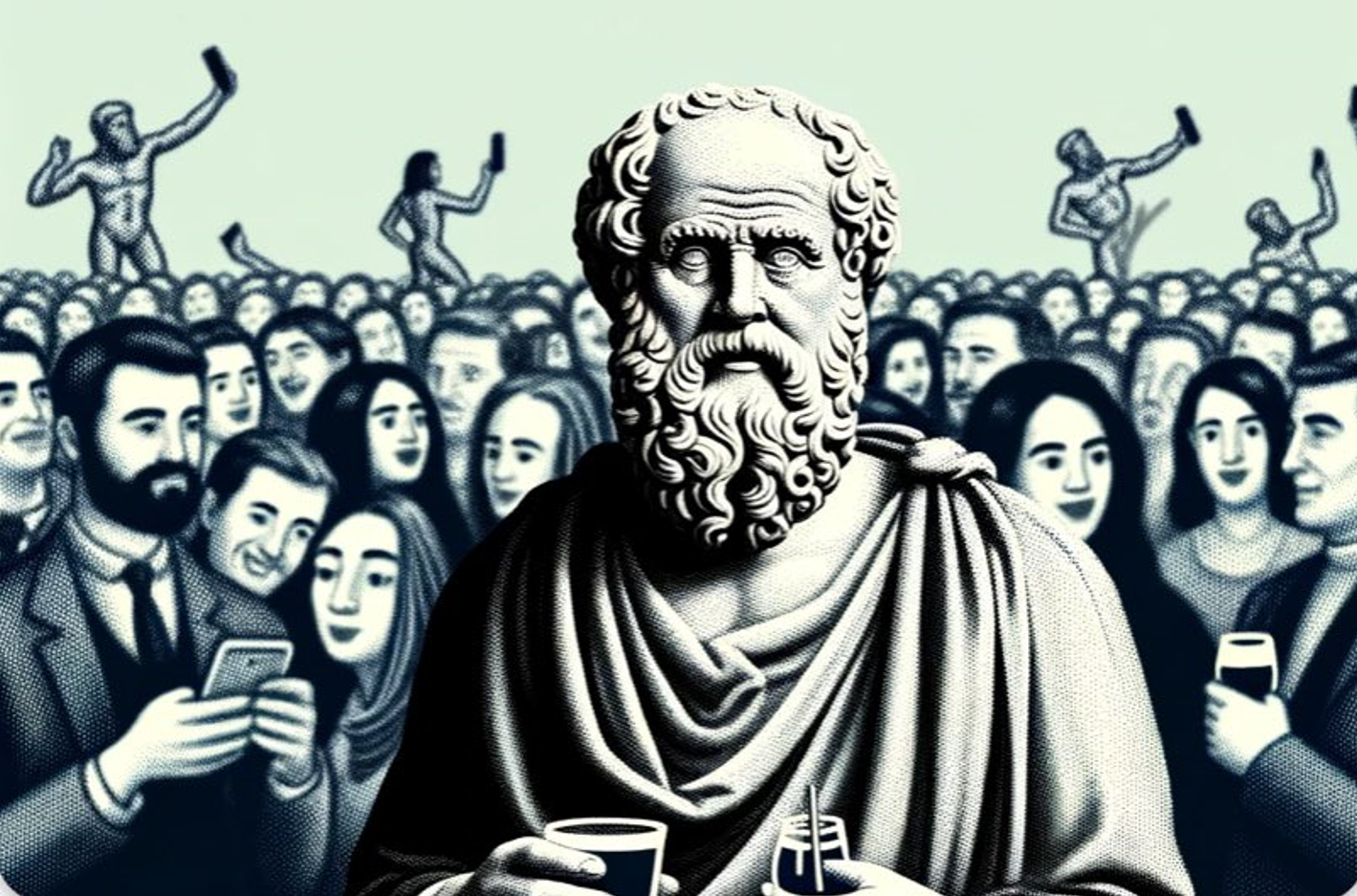

Can’t Stop Partying: Hedonism, Love, and the Enshittification of Everything (TTCB XV)

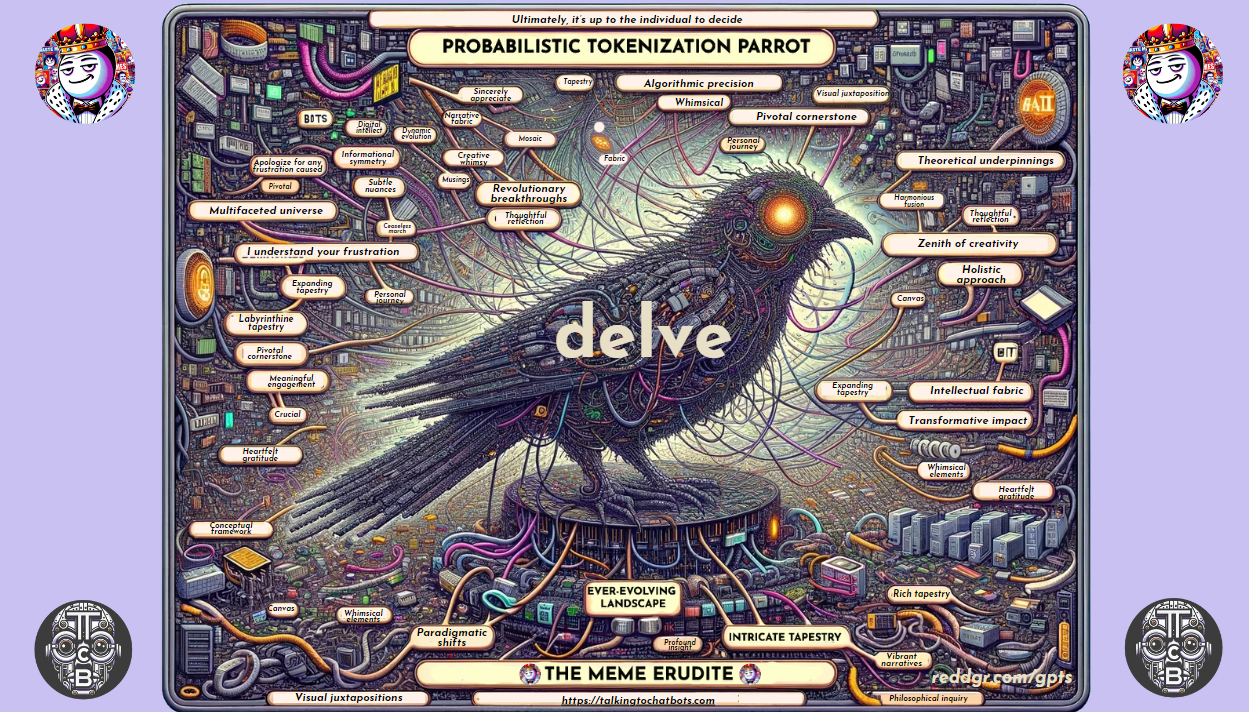

We talk so much about ‘papers’ and the presumed authenticity of their content when we read academic research in any field, and especially in machine learning. Ironically, the process that created the medium for diffusing scientific research in the physical paper era was not that different from the process that produces ‘content’ in the era governed by machine learning algorithms, whether these are classifiers, search engines, or generative algorithms: ‘shattering’ texts by tokenizing them and creating embeddings, and decomposing pieces of visual art into numeric ‘tensors’ that a deep artificial neural network will later ‘diffuse’ into images that attract clicks for this cringey blog…