Why Do LLMs Like ‘to Delve’? The Search Engine Battle

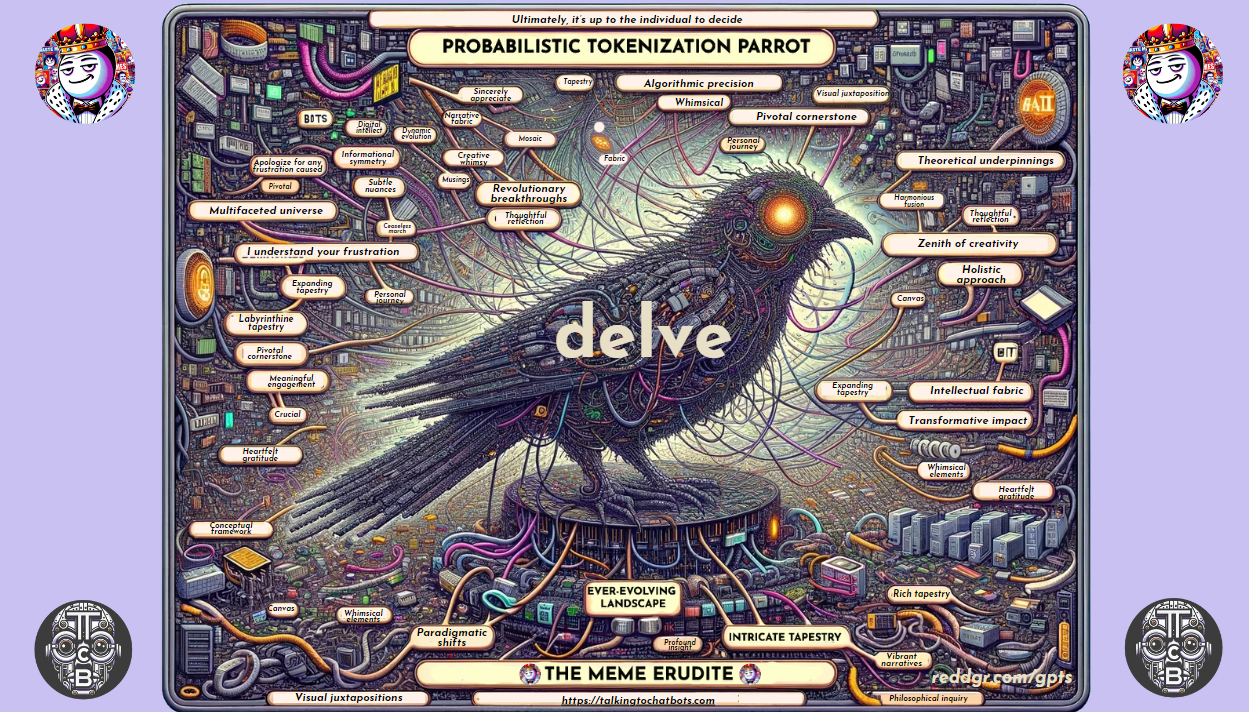

The idea that LLMs are good for searching for information is the quintessential misconception about how they help with, or improve on, existing technology. In my opinion, web search is a more effective way to find information, simply because search engines give the user what they want faster, and in a format that fits the purpose much better than a chat tool: multiple sources to scroll through in a purpose-built user interface, with filter options, configuration settings, listed elements, excerpts, tabulated results, or whatever that particular web search tool offers. What you do not get is the “I’m here to assist you, let’s delve into the intricacies of the ever-evolving landscape of X…” followed by a long, perfectly composed paragraph generated by a probabilistic model, which is what you would typically get when you send ChatGPT a prompt asking for factual information about a topic named ‘X’.