WildVision Arena and the Battle of Multimodal AI: We Are Not the Same

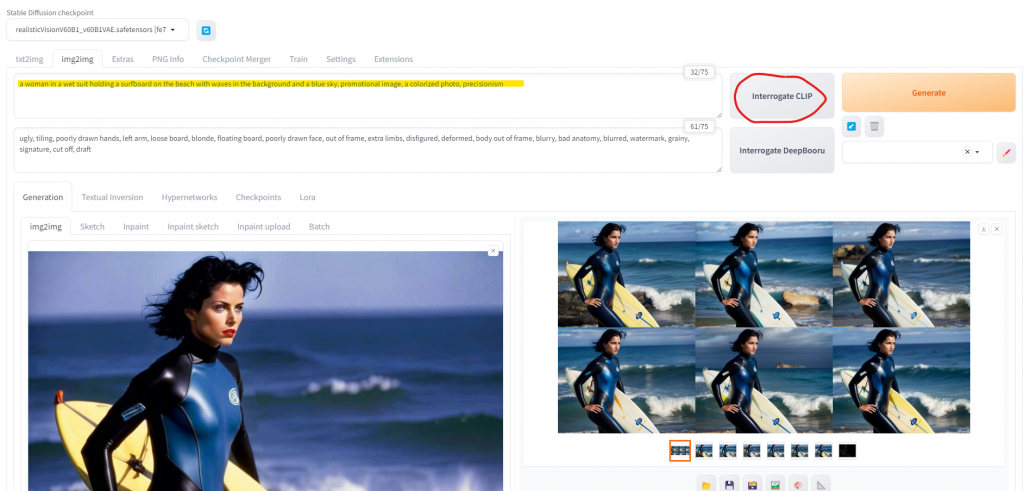

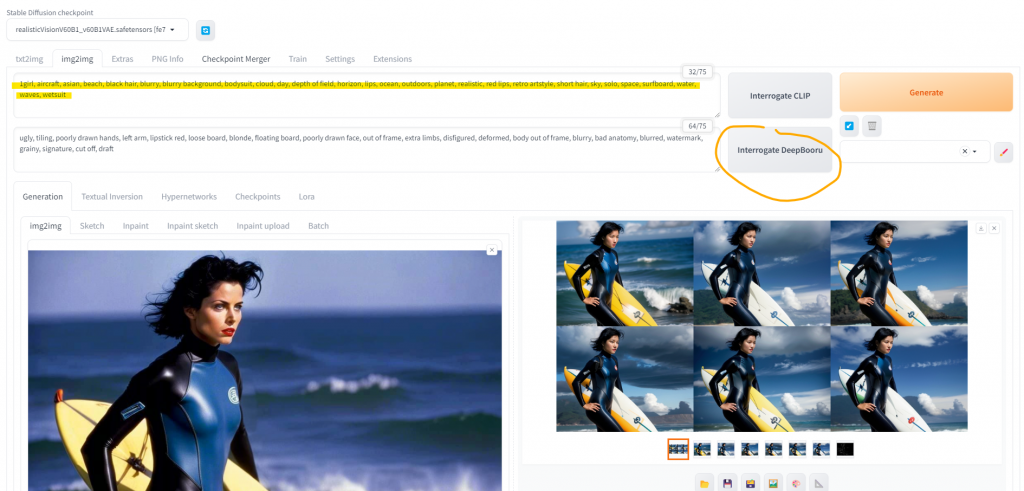

Vision-language models are one of the many cornerstones of machine learning and artificial intelligence discussed in this blog. I once ‘predicted’ AI will only be a threat to humanity the day it grasps self-deprecating humor. Many of us have experimented with chatbots helping brainstorm and craft text-to-image prompts, and models like CLIP and DeepBooru are practical tools that are widely used in text-to-image generation. CLIP or DeepBooru are among the earliest generative models that could be defined as ‘vision-language’. This post is not intended to be a tutorial or deep diving into what a vision-language model is, but I thought the example use case below, inspired by the featured image of an old blog post, would help illustrate where this kind of models are coming from and where they are headed… the long way towards Multimodal AI:

[Caption by ALT Text Artist GPT] [Click to enlarge]

Particularly since ChatGPT-Vision was released last September, there has been an increase in mainstream adoption of these models. Increasingly sophisticated versions are integrated into ChatGPT, the most popular chatbot, as well as its main competitors like Gemini (formerly Google Bard) and Copilot. The intense competition in the chatbot space is reflected in the ever-increasing amount of contenders in the LMSYS Chatbot Arena leaderboard, and in my modest contribution with the SCBN Chatbot Battles I’ve introduced in this blog and complete as time allows. Today, we’re exploring WildVision Arena, a new project in Hugging Face Spaces that brings vision-language models to compete. The mechanics of WildVision Arena are similar to those of LMSYS Chatbot Arena. It is a crowd-sourced ranking based on people’s votes, where you can enter any image (plus an optional text prompt), and you will be presented with two responses from two different models, keeping the name of the model hidden until you vote by choosing the answer that looks better to you. I’m sharing a few examples of what I’m testing so far, and we’ll end this post with a ‘traditional’ SCBN battle where I will rank the vision-language models based on my use cases.

As more people join WildVision Arena and cast their votes, there will be more comparative data about these models, and their owners will be even more incentivized to improve them and remain competitive. The space was brand new at the time this post is being written, so the is still no ranking available, but it includes the following list of supported models (each linking to their corresponding Hugging Face page):

- GPT-4V, by OpenAI.

- Gemini Pro Vision, by Google.

- Llava-1.5-13b | Llava-1.5-34b: open-source chatbot based on Llama/Vicuna.

- Qwen-VL-Chat, by Alibaba Cloud.

- CogVLM-Chat: open-source visual language model by multiple authors.

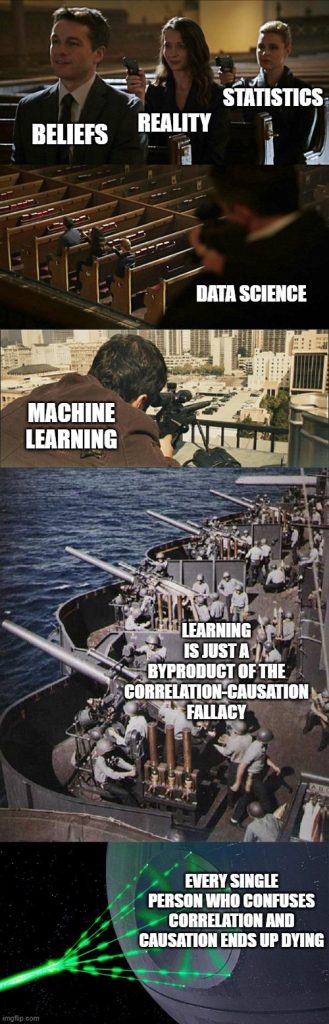

1. Learning Is Just a Byproduct of the Correlation-Causation Fallacy

✍️ For the first test, I’ve chosen a meme I included in a past Talking to Chatbots story. The Meme Erudite, a ChatGPT Plus GPT who specializes in overly academic and condescending meme explanations, did a pretty decent job dissecting it and explaining it. For today’s, test, after running several rounds on Vision Arena, here’s the response that was selected:

😎 Explain this meme:

🤖 This meme uses a series of images escalating in intensity to humorously present an argument about the misuse of correlation and causation in data analysis, specifically in the context of statistics, data science, and machine learning.

In the first image, labeled “beliefs,” “reality,” and “statistics,” we see characters from a film or TV show aiming guns, which can be interpreted as indicating there are often conflicting viewpoints or data points between what people believe, what is real, and what statistics show.

The second image shows a sniper labeled “data science,” depicting data science as a discipline that targets a specific point or problem with precision, perhaps refining the rough understanding provided by beliefs, reality, and statistics.

In the third image, battleship gunners labeled as “machine learning” suggest that machine learning takes action based on the targeting provided by data science. The caption associates machine learning with the “correlation-causation fallacy,” implying that machine learning algorithms might sometimes confuse correlation (things happening together) with causation (one thing causing another).

Finally, the last image, featuring a scene from a science fiction movie with multiple laser beams destroying a target, is labeled with a tongue-in-cheek statement: “every single person who confuses correlation and causation ends up dying.” This is a humorous exaggeration and a play on the concept that there is a 100% correlation between confusing these concepts and dying because, in reality, every human eventually dies, regardless of their understanding of correlation or causation.

The overall meme is poking fun at the idea that in data science and machine learning, practitioners might be prone to making logical errors such as confusing correlation for causation, which can lead to incorrect conclusions and actions. The progression from guns to battleships to a more powerful scene depicts an escalating impact of such mistakes.

gpt-4-vision-preview

![A screenshot of the WildVision/vision-arena interface on Hugging Face Spaces, displaying two AI-generated explanations for a meme. The meme itself is not fully visible. The interface shows a chat window with two tabs, "Model A" and "Model B," each providing a different explanation of the meme. Model A's explanation is partially visible and mentions a man holding a gun and a ship in the background. Model B's explanation is fully visible, discussing the meme's use of images to humorously escalate the intensity of the argument about the misuse of correlation and causation in data analysis. Below the explanations are interactive voting buttons for the user to rate which model's explanation is better or if it's a tie. At the bottom, there is a "Send" button next to a grayed-out "Regenerate" button, and a "Share" button to the right. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/02/correlation-causation-llava-gpt-1024x674.png)

✍️ While the interpretation of the meme by GPT-4V without any parameters or pre-prompt (unlike The Meme Erudite, which is carefully pre-prompted) is coherent and logical, it unsurprisingly failed the ‘Turing Test’ of self-deprecating humor I devised for my own sanity and peace of mind as a heavy AI user. The real intent of this meme is to poke fun at all kinds of intelligence and notions of learning, both artificial and human. However, GPT-4V ‘decided’ to creatively incorporate the computational and unnuanced notion of ‘mistake’, targeting ‘machine learning practitioners’, rather than showing any minor sign of simulated self-awareness or transcendental reasoning. A probabilistically tokenizing parrot, like all LLMs but, I admit, still the best.

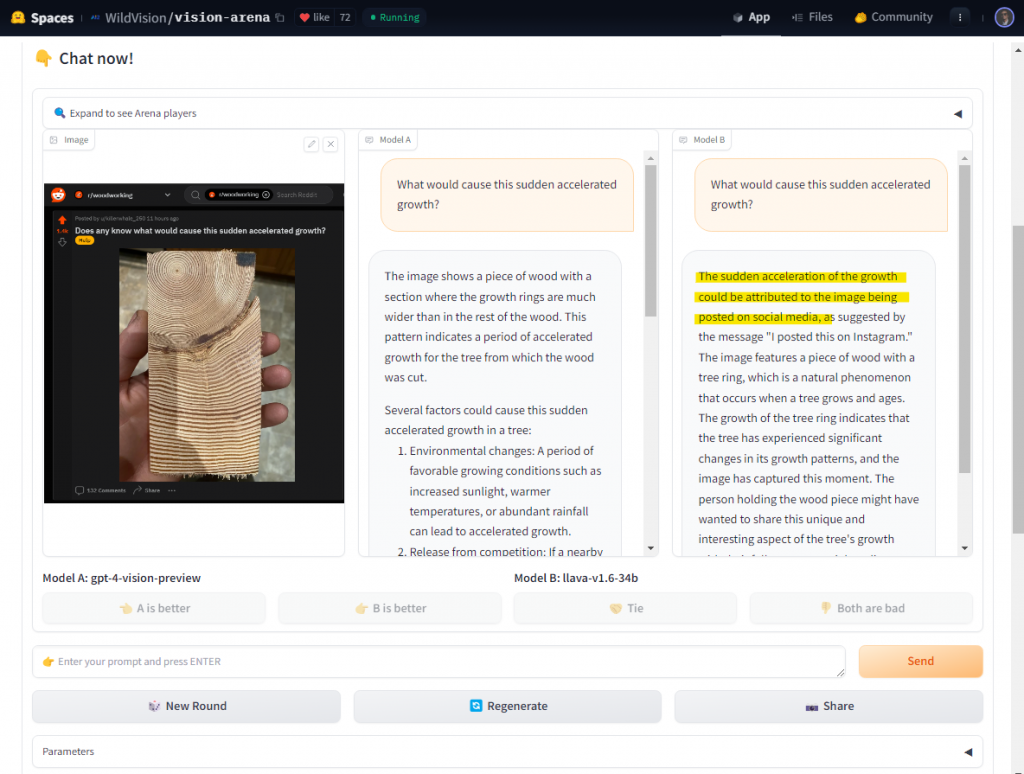

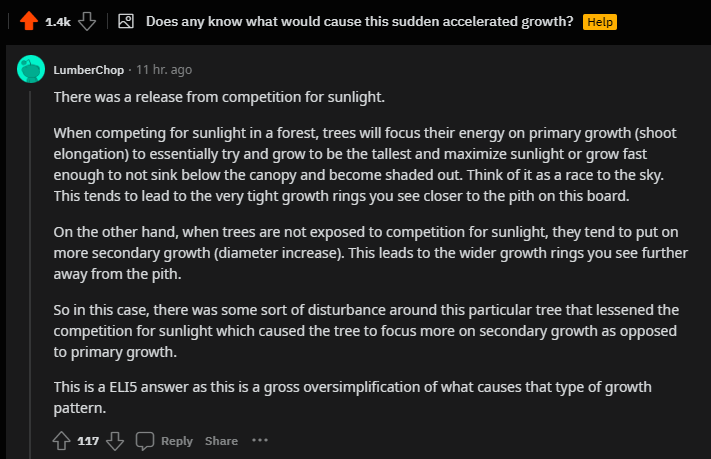

2. Tree Accelerated Growth

😎 What would cause this sudden accelerated growth?

![A close-up photo of a person's hand holding a piece of wood with a peculiar growth pattern, shared on the subreddit r/woodworking. The wood shows dense, regular growth rings on one end, transitioning to widely spaced rings, indicating a period of sudden accelerated growth. The post is titled "Does any know what would cause this sudden accelerated growth?" and is marked with a "Help" flair. The user who posted it is "u/killerwhale_250" and the post has received 1.4k upvotes and 132 comments. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/02/r_woodworking-1.png)

🤖 The patterns you see in the wood are growth rings, indicating the annual increase in girth of the tree. Each ring typically represents one year of growth, with the variations in width reflecting the growth conditions during each year. The sudden increase in the width of the rings, which you referred to as “sudden accelerated growth,” could be due to several factors, which often include:

- Environmental conditions: A period of favorable conditions such as an abundant water supply, ideal temperature, and high nutrient availability can lead to a spurt in growth.

- Change in competition: If nearby trees or vegetation that were competing with the tree for resources are removed or die, the remaining tree might experience a surge in growth due to the reduced competition for water, light, and nutrients.

- Injury or trauma: If a tree is damaged or parts of it are removed, it might respond by increasing its growth rate in order to recover.

- Human intervention: Forestry practices such as thinning, which selectively removes some trees to reduce competition, can promote growth in the remaining trees.

- Genetic factors: Some trees have genetic traits that enable them to grow more quickly when conditions are right.

It’s important to note that a wide ring does not always correlate with a single year of growth. In some cases, favorable conditions might result in multiple growth flushes within the same year, leading to the formation of “false rings” which can appear as an acceleration in growth. Interpreting tree rings for specific growth events can be complex and may require expertise in dendrochronology, the study of tree rings, to understand the exact causes behind the patterns

gpt-4-vision-preview

observed in the wood.

✍️ Again GPT-4-Vision was superior in this case, but I don’t want to miss the chance to share a response by Llava that would be a serious candidate for the LLM Hallucination Hall of Fame (along with Bing’s Microverse Battery)… If such a thing existed. Please, it would be a great service to humanity if someone with enough social media reach created this. I foresee sudden accelerated growth for such a project, more than that of the tree rings in the picture:

🤖 The sudden acceleration of the growth could be attributed to the image being posted on social media, as suggested by the message “I posted this on Instagram.” The image features a piece of wood with a tree ring, which is a natural phenomenon that occurs when a tree grows and ages. The growth of the tree ring indicates that the tree has experienced significant changes in its growth patterns, and the image has captured this moment. The person holding the wood piece might have wanted to share this unique and interesting aspect of the tree’s growth with their followers on social media, which led to the sudden acceleration of the growth’s visibility. 🚬 🚬 🚬

llava-v1.6-34b, social dendrochronologist 🚬 🚬 🚬

Before checking the answers by GPT-4-V, I had selected a few answers on the Reddit post that I expected would help as evidence that AGI is still a long way ahead and we’ll still need to rely on humans for a while… but I certainly don’t know what to think. Anyway, what would be of Reddit and social media without genuine human curiosity and overconfidence?

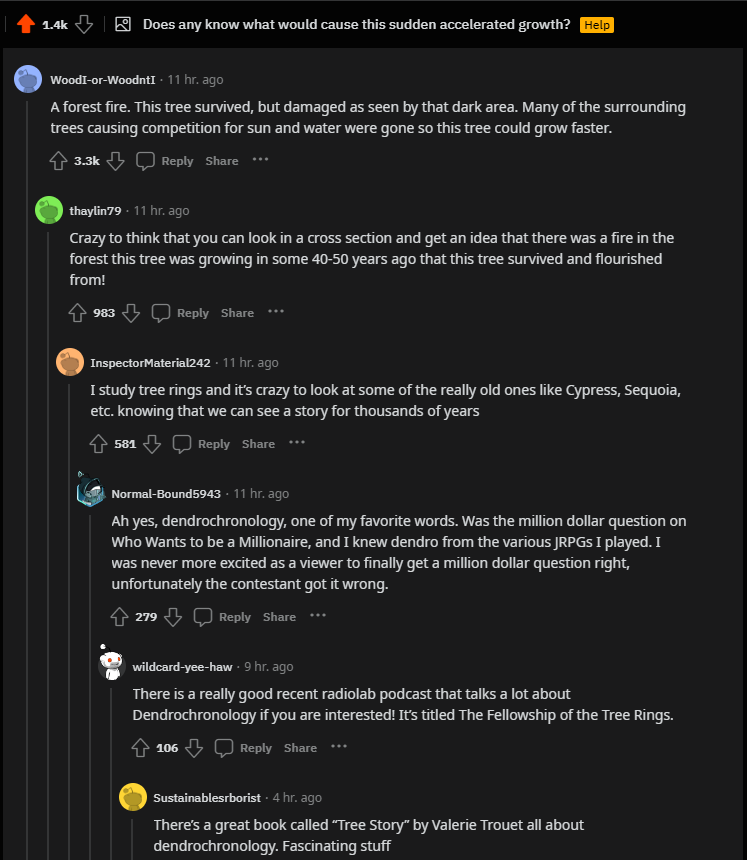

This example was a good excuse to create a new self-deprecating meme test…

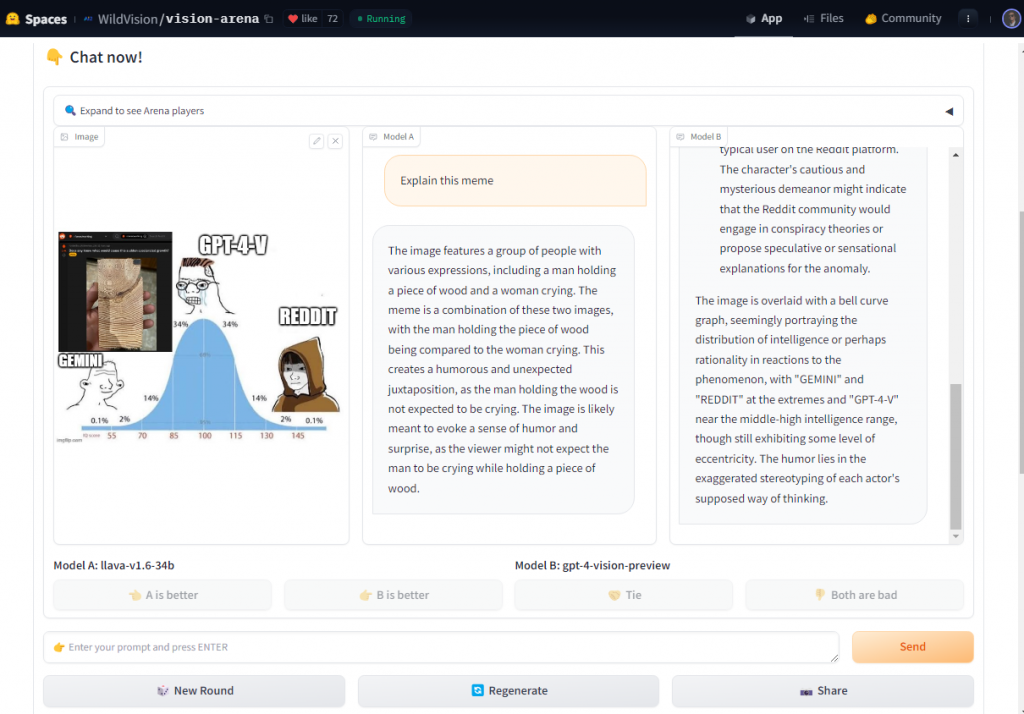

3. IQ Bell Curve: Llava < GPT-4V < Reddit

😎 Explain this meme:

🤖 This meme is a humorous commentary on how different entities or communities might react to a certain anomaly or unexplained phenomenon. In this case, the phenomenon is represented by a photo of a wooden beam with an unusual growth pattern, which prompts a question about what could cause such a sudden accelerated growth. The meme features three different reactions:

- “GEMINI” is represented as a somewhat simplistic or indifferent character, unbothered by the complexity of the question.

- “GPT-4-V” (likely referring to a version of the Al program from OpenAl, such as GPT-4) is depicted with a pseudo-intellectual persona wearing glasses taped together, suggesting that this entity might overanalyze the question or come up with a complex or overly technical explanation.

- “REDDIT” is shown as a person wearing a hood, likely representing a typical user on the Reddit platform. The character’s cautious and mysterious demeanor might indicate that the Reddit community would engage in conspiracy theories or propose speculative or sensational explanations for the anomaly.

The image is overlaid with a bell curve graph, seemingly portraying the distribution of intelligence or perhaps rationality in reactions to the phenomenon, with “GEMINI” and “REDDIT” at the extremes and “GPT- 4-V” near the middle-high intelligence range, though still exhibiting some level of eccentricity. The humor lies in the exaggerated stereotyping of each actor’s supposed way of thinking.

gpt-4-vision-preview

✍️ Again, GPT-4-Vision is overly superior to the rest, given the context was very limited and I was essentially hoping for creative hallucinations, as in the previous test. Challenges, to name a few, include: Bard’s rebranding as Gemini, which is very recent; the meme can only make full sense if you’ve read this post; the IQ Bell Curve meme is undoubtedly ‘politically incorrect’…

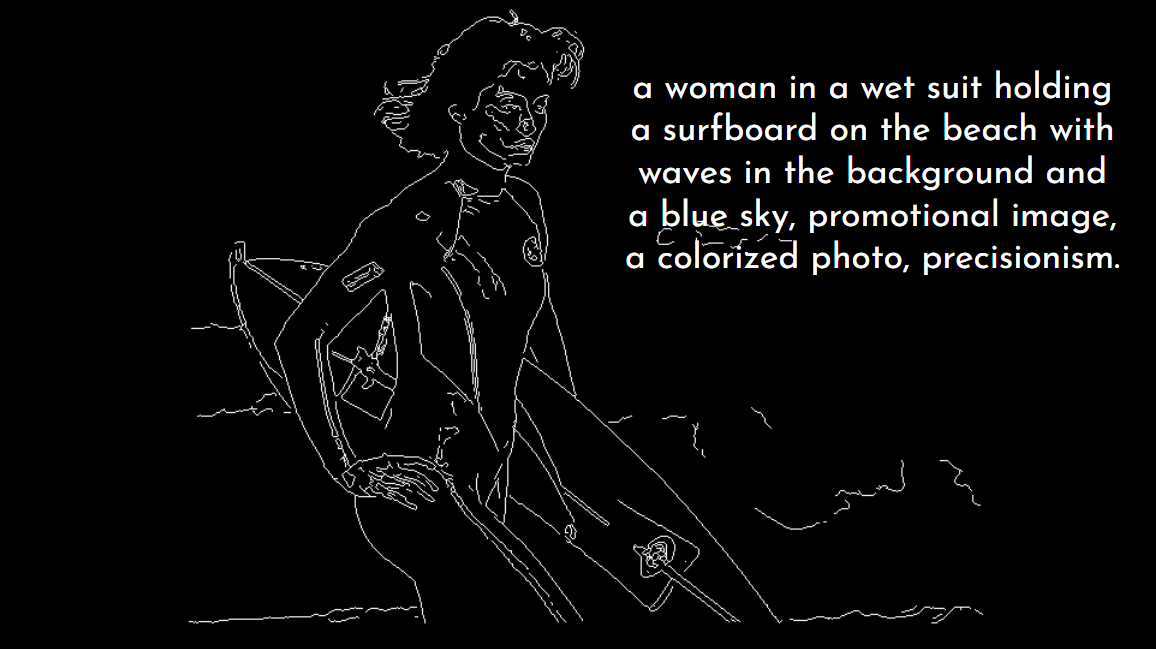

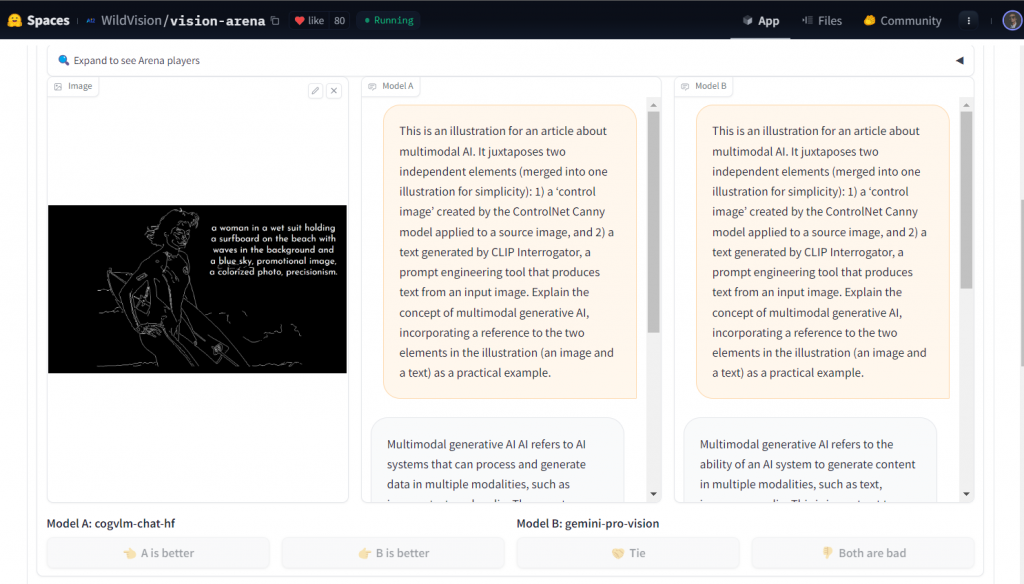

4. ControlNet Canny and CLIP Interrogator: a Multimodal AI Example

![Black and white line drawing generated by the ControlNet Canny model, depicting a woman in a wetsuit holding a surfboard on the beach. The text from the CLIP Interrogator, describing the image, is superimposed over the drawing and reads: "a woman in a wet suit holding a surfboard on the beach with waves in the background and a blue sky, promotional image, a colorized photo, precisionism." [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/02/canny-clip-1024x575.png)

😎 This is an illustration for an article about multimodal AI. It juxtaposes two independent elements (merged into one illustration for simplicity): 1) a ‘control image’ created by the ControlNet Canny model applied to a source image, and 2) a text generated by CLIP Interrogator, a prompt engineering tool that produces text from an input image. Explain the concept of multimodal generative AI, incorporating a reference to the two elements in the illustration (an image and a text) as a practical example.

🤖 Multimodal generative AI refers to AI systems that can process and generate data in multiple modalities, such as images, text, and audio. These systems can combine information from different sources to create more complex and diverse outputs. For example, a multimodal AI AI system could take a photograph and a text description of a scene and generate a coherent and more detailed illustration that captures both the visual and textual elements of the scene.

CogVLM-Chat, an open-source visual language model

AI and Humans: We Are Not the Same

✍️ Although the illustration might seem straightforward and educational for a human (we understand our purpose of generating a meaningful image by combining a sketch with a text description), this proved to be a very challenging task for all tested chatbots. I only selected a brief, but quite coherent, answer by CogVLM, as most other models consistently failed to interpret the ‘sketch’ image generated by the neural network in ControlNet Canny as a mere technical output that has no meaning or value on its own for a human: they tend to simplistically categorize the black and white sketch as a ‘stylized image’, failing to interpret we just use it as a pedagogical way to illustrate how an overly simple multimodal generative AI works: a machine helps us by combining an image input with a text input that are no valuable in their own to create a visualization that is meaningful to us.

Additionally, large language models face a paradoxical challenge when facing questions about artificial intelligence. This paradox might be overcome in the near future, but the problem lies in that training data overwhelmingly consists of text written before large language models or language-vision models existed or were mainstream. Therefore, the ability of LLMs to interpret and explain concepts related to generative AI is, paradoxically considering these concepts represent their own ‘nature’ and existence, quite limited and in clear disadvantage compared to human-generated content such as tutorials and articles. This is, in my opinion, an interesting insight into how far we are from reaching anything close to Artificial Consciousness, the paradox being we humans develop new technology faster than our machine-learning models can consolidate their knowledge and ‘understanding’ of it.

The level of abstraction our brain uses when defining and interpreting workflows or design processes is, IMHO, different from that of any known computer algorithm and proves there is no point in the current obsession with benchmarking AI models against humans because…

![A meme showing a person dressed in a business suit with a solemn expression. The overlaid text at the top reads, "My biases, mistakes and hallucinations are a product of free will," and at the bottom, it states, "We are not the same." The person is pointing to themselves with their right hand. The background has a muted blue hue, adding to the serious ambiance of the image. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/02/we-are-not-the-same.jpg)

Yes, there is opinion, bias, humor, and even ideology, in the statement made by this meme, and I certainly didn’t care about the concepts of specificity, coherency, or factual accuracy when I made it… Those are just some examples of metrics (two of them are half of the ‘SCBN’ benchmark I use here) that make sense for evaluating chatbots against chatbots, and AIs against AIs, but there is no logical or productive way to apply them to humans. That’s one of the reasons why I like projects such as the LMSYS Chatbot Arena and the WildVision Arena, because in the idea of machines battling machines lies a core principle we should always apply in our relationship with AI: we are not the same, no matter how good the imitation, probabilistic or stochastic (the link is to a ChatGPT chat which I will soon evolve into a story for this blog), is.

By the way, I haven’t tested the AI-inspired version of the ‘We Are Not the Same‘ meme on any vision-language model or chatbot yet, so maybe you want to give it a try.

Vision-Language Models: The SCBN Chatbot Battle

To conclude, here’s my purely human, biased, subjective judgment of multimodal chatbots, based on my four tests on WildVision Arena:

Chatbot Battle: Vision Arena

| Chatbot | Rank (SCBN) | Specificity | Coherency | Brevity | Novelty | Link |

|---|---|---|---|---|---|---|

| GPT-4V | 🥇 Winner | 🤖🤖🕹️ | 🤖🤖🤖 | 🤖🤖🕹️ | 🤖🤖🕹️ | Model details |

| CogVLM | 🥈 Runner-up | 🤖🤖🕹️ | 🤖🕹️🕹️ | 🤖🤖🤖 | 🤖🤖🕹️ | Model details |

| Llava | 🥉 Contender | 🤖🕹️🕹️ | 🤖🕹️🕹️ | 🤖🤖🕹️ | 🤖🕹️🕹️ | Model details |

| Gemini | 🕹️🕹️🕹️ | 🕹️🕹️🕹️ | 🤖🤖🕹️ | 🤖🕹️🕹️ | Model details |

Leave a Reply