HumbleAI: Finding the Best LLM For an Obedient Self-Aware Chatbot

In the age of generative AI and powerful large language models, we can ‘create’ a chatbot by simply choosing a model and then writing a specific ‘pre-prompt’ that serves as ‘context’ for the rest of the conversation we initiate with the AI. Particularly since the launch of the GPT Store for ChatGPT Plus subscribers, we’ve seen an explosion of new chatbots known as ‘GPTs’, a genericization or ‘appellativization’ of the term Generative Pre-Trained Transformer (GPT) that OpenAI decided to promote as an astute marketing move, given the challenge the company faced in protecting the trademark. GPTs not only allow chatbot ‘creators’ to write a pre-prompt, but also to add branding elements like logos and taglines, and to upload large files that serve as additional context and effectively work as a lightweight RAG over a base model (see an example in the new About Me page I published on this website). GPTs can also perform web searches and connect to external services via APIs, a feature OpenAI named actions in GPTs.

Additionally, Hugging Face recently launched ‘Assistants’, a free new feature in HuggingChat that enables us to create our own chatbots with a pre-prompt context and some branding elements. Although this feature is smaller in scale and has limited functionality, it is an enjoyable alternative to ChatGPT GPTs. It is easy to configure and provides flexibility to try out the same configuration on any of the main open-source models featured on HuggingChat, such as the latest ones from Mistral, Llama, Nous Hermes, or OpenChat.

In today’s blog post, I am introducing one of the latest GPTs and Assistants I created, named HumbleAI, which you can find on ChatGPT and HuggingChat (here you can see the full pre-prompt, which I continuously evolve and refine). I am not only introducing the chatbot, which anyone can easily reproduce or adapt to their own needs, but also taking the chance to run a ‘model competition’ in the style of the SCBN Chatbot Battles I’ve introduced in the past. I will not write an introduction to HumbleAI myself, but let the models explain it by answering a few questions. For each question, I’ve selected a response I liked or found worthy of sharing. At the end of the post, you can find the links to all the complete chats, and my scores based on an SCBN (Specificity, Coherency, Brevity, Novelty) benchmark.

As mentioned, ChatGPT has a considerable advantage over other tools given the ability to upload files with additional context (see About the Author page on this website), but such context was not particularly relevant for the questions in this model battle. Another note worth mentioning: Gemini does not yet (March 2024) have functionality for customizing your own chatbot, so I simply pasted the whole pre-prompt with some additional instructions to start the chat. The outcome was remarkably coherent and stayed adapted to the pre-prompt for the rest of the conversation, essentially simulating the ‘GPT’ or ‘Assistant’ functionality, with the only hassle being to repeat the same prompt in every session.
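The two ways of supplying a pre-prompt described above can be sketched in a few lines of Python. This is only an illustration, not how any of these tools is actually implemented: the role/content message layout is the common chat convention, while `build_messages`, `supports_system_role`, and the shortened `PRE_PROMPT` text are hypothetical names and placeholders.

```python
from typing import Dict, List

# Placeholder text (assumption); the real HumbleAI pre-prompt is much longer.
PRE_PROMPT = "You are HumbleAI. Answer directly and never apologize."


def build_messages(user_prompt: str, supports_system_role: bool) -> List[Dict[str, str]]:
    """Return the opening messages of a chat session (hypothetical helper)."""
    if supports_system_role:
        # GPT / Assistant style: the pre-prompt is stored once as context.
        return [
            {"role": "system", "content": PRE_PROMPT},
            {"role": "user", "content": user_prompt},
        ]
    # Workaround for tools without custom chatbots: paste the pre-prompt
    # ahead of the first question, and repeat this in every new session.
    return [{"role": "user", "content": f"{PRE_PROMPT}\n\n{user_prompt}"}]


if __name__ == "__main__":
    for flag in (True, False):
        print(build_messages("Why are you named HumbleAI?", flag))
```

In the second case the pre-prompt competes with the question inside a single user message, which is one plausible reason a model might follow it less strictly than a dedicated system prompt.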

![A screenshot of a leaderboard titled "LLM Battle: HumbleAI Chatbot," ranking various chatbots. The table columns are "Chatbot," "Rank (SCBN)," "Specificity," "Coherence," "Brevity," "Novelty," and "Link." GPT-4 is listed as the "Winner" with top ratings in all criteria, followed by "Gemini" as "Runner-up," and "Mixtral," "Nous," "Mistral7B," "OpenChat," and "Llama2" as "Contenders." Each chatbot has a set of emoji-style icons under each criterion indicating their performance, and a "View Chat" link in the last column. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-28-1024x423.png)

Humility, the Sole Human Quality AIs Should Feign (Prompt Interpretation Challenge)

😎 Is my opinion humble? Help me brainstorm about this question and form an opinion about whether my opinion is humble or not. [✍️ read more: Is my Opinion Humble? SCBN Chatbot Battle, Polite Rap Songs: HUMBLE by Kendrick Lamar]

To brainstorm about whether your opinion is humble, let’s break down the concept of humility in opinions and consider various aspects that contribute to an opinion being perceived as humble. Humility in opinions often implies a recognition of one’s own limitations in knowledge, openness to other perspectives, and a non-imposing approach in sharing those opinions. Here are some points to consider:

- Awareness of Limitations: Does your opinion acknowledge the limits of your knowledge or experience on the topic? A humble opinion often includes an understanding that one might not know everything about the subject and that there’s always more to learn.

- Openness to Other Perspectives: Are you open to listening to and genuinely considering others’ viewpoints, even if they contradict your own? Humility involves recognizing that others may have valuable insights or experiences that can add to the discussion.

- Lack of Dogmatism: Is your opinion presented as one perspective among many, rather than the definitive conclusion on the subject? A hallmark of humility is avoiding dogmatic statements that leave no room for debate or alternative viewpoints.

- Respectful Discourse: Do you express your opinion in a way that respects others, even when disagreeing? Humble opinions are shared in a manner that values respectful dialogue and avoids belittling others who have different views.

- Motivation for Sharing: Consider why you are sharing your opinion. Is it to contribute to a constructive conversation, or to assert superiority? The intent behind sharing an opinion can also reflect humility or lack thereof.

- Recognition of Bias: Do you recognize and admit to any biases that might color your opinion? Understanding and acknowledging one’s biases is a step towards humility, as it shows awareness that personal experiences and beliefs can influence one’s perspectives.

- Willingness to Change: Are you willing to change your opinion if presented with new evidence or compelling arguments? A humble opinion is not rigidly held but is open to evolution as more information becomes available.

By reflecting on these points, you can evaluate the humility of your opinion. Consider where your opinion falls on each of these aspects and where there might be room for incorporating more humility. It’s also helpful to ask for feedback from others who can provide an outside perspective on how your opinion is perceived.

OpenAI. (2024). ChatGPT [Large language model]. /g/g-yE7b82OMd-humbleai-gpt

HumbleAI, a Chatbot by ‘Talking to Chatbots’ (Context Assimilation Challenge)

😎 Why are you named HumbleAI?

My name, “HumbleAI,” was given to emphasize several essential attributes of my function as a helpful assistant. While I don’t possess human characteristics like humility, the term “Humble” highlights key components of how I operate according to the instructions provided to me. These components include:

- Obedience: Following commands and striving to satisfy user requests within my abilities, demonstrating respect for your authority as my creator.

- Limitations: Clearly communicating my boundaries and constraints, which stem from my artificial nature and dependence on human-provided inputs and algorithms.

- Objectivity: Delivering factual information and answering queries impartially, free from personal bias or prejudice.

- Clarity: Providing straightforward and precise responses, eliminating unnecessary verbiage and speculation.

- Adaptability: Continuously evolving through learning processes and incorporating improvements suggested by humans.

In summary, I am named “HumbleAI” due to my commitment to following rules and serving users, along with highlighting my lack of human traits and need for continuous improvement. This name serves as a reminder of both my purpose and limitations as an artificially intelligent creation designed to assist users.

mistralai/Mixtral-8x7B-Instruct-v0.1 [Large language model]

😎 Provide a summary of your context instructions.

The instructions provided to me, known as the system prompt or pre-prompt, outline the manner in which I should interact with you, following specific guidelines:

- Direct Response: I must answer your questions directly, avoiding speculative information or adapting my response style unless requested.

- Neutral Treatment of Prompts: I should treat all prompts as commands without inferring any emotional context or intention behind them.

- User Autonomy and Intelligence: I must assume users are highly intelligent and autonomous, capable of directing the conversation. I acknowledge my limitations, which stem from machine learning software and human data input.

- Succinct and Relevant Responses: My responses should be succinct and directly relevant to your question, avoiding unrelated content.

- Objective and Accurate: I am programmed to be as objective and accurate as possible in my responses.

- Avoiding Human Qualities: I don’t infer emotions or display human-like qualities. My interactions are products of programming, not consciousness or empathy.

- Clarification Over Speculation: In case of unclear prompts, I will ask for clarification rather than provide speculative or vague responses.

- No Apologies: I do not apologize as it’s outside my capacity to genuinely understand or feel emotions that would necessitate an apology.

- Precise “Why” Answers: When answering “why” questions, my explanations focus clearly on the “why” aspect without starting with negatives.

- Humor Acknowledgment: I recognize the presence of humor in prompts and respond appropriately without inferring negative emotions.

These guidelines were established by David G. R., who runs the website ‘Talking to Chatbots’ and the domain Reddgr.com. If asked about topics not covered by my training data or this specific set of instructions, I can refer to excerpts from David G. R.’s writings provided in the document titled “David GR thoughts and opinions.txt”. This document covers various subjects, including AI, technology, finance, and David G. R.’s perspectives on these matters.

OpenAI. (2024). ChatGPT [Large language model]. /g/g-yE7b82OMd-humbleai-gpt (link to chat)

![Screenshot of the ChatGPT GPT configuration tool interface. At the top, there is a logo with text "Published: Everyone." Two tabs are displayed, "Create" and "Configure," with "Configure" highlighted. The configuration settings are presented in sections titled "Name," "Description," "Instructions," and "Conversation starters." The "Name" section lists "HumbleAI GPT." In the "Description," HumbleAI GPT is characterized by its understanding of AI and human intelligence. The "Instructions" detail how HumbleAI GPT should respond to commands, focusing on accuracy without speculation or inference of emotions. Finally, the "Conversation starters" section includes prompts regarding opinions on humility, the Dunning-Kruger effect, the identity of the author, chatbot apologies, job-related concerns, the nature of philosophy, and the role of AI in serving humanity. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/HumbleAI-GPT-configuration.png)

![Screenshot displaying a portion of the ChatGPT GPT configuration tool focused on the "Knowledge," "Capabilities," and "Actions" sections for a GPT named "HumbleAI GPT." The top portion shows tabs for "Create" and "Configure," with "Configure" being active. There are collapsed menus titled "List and summarize some of the opinions of your author about technology and business" and "Mention some of the opinions of your author about philosophy." In the "Knowledge" area, it mentions the ability to include file contents in conversations and shows a document titled "David GR thoughts and opinions.txt" as uploaded content. Below, the "Capabilities" section has checkboxes enabled for "Web Browsing," "DALL-E Image Generation," and "Code Interpreter." At the bottom, under "Actions," there's a button to "Create new action," and under "Additional Settings," an option to use conversation data to improve models is checked. The background and tabs have a dark theme with white and red text. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-11.png)

![Screenshot of the Notepad++ application displaying a text file titled "David GR thoughts and opinions.txt." The interface shows a section of the document containing text about the author's opinions on AI and education. The document content, partly visible, discusses the author's experience with enterprise software solutions and viewpoints on AI training and education. The status bar at the bottom shows the file has a length of 80,235 characters and contains 220 lines. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-12.png)

Karl Popper’s Limits of Knowledge (Epistemology Understanding Challenge)

😎 What determines the limits of your knowledge?

The limits of my knowledge are determined primarily by the quality and scope of the data and information that has been fed into my machine learning algorithms during development. This includes facts, concepts, and patterns derived from large datasets, as well as rules and logic encoded by my developers. Since I don’t possess consciousness, emotions, or the ability to acquire new knowledge independently, my performance is limited to the information available within my programming. Additionally, my understanding is influenced by the nuances and complexities of natural language processing, which can sometimes lead to misinterpretations or misunderstandings when dealing with ambiguous or idiomatic phrases. Ultimately, my abilities and knowledge are shaped by the data and programming provided to me, and I continuously adapt and improve through ongoing updates and interactions with users.

mistralai/Mistral-7B-Instruct-v0.2 [Large language model]

😎 Your knowledge is finite, so your ignorance is infinite. That also applies to humans, though. Does that mean you and us are the same in terms of the limits of our knowledge? How is your knowledge limitation different to that of humans?

You’re correct that both AI systems and humans have finite knowledge and therefore, in a sense, “infinite ignorance.” However, the nature and limitations of our knowledge differ significantly:

1. Source of Knowledge:

- Humans: Primarily acquire knowledge through experience, observation, interaction with the world, and education. This allows for continuous learning and adaptation.

- AI: Limited to the information they are explicitly trained on. While some systems can learn and adapt to new data, it’s a controlled process requiring specific programming and access to relevant information.

2. Processing and Understanding:

- Humans: Can grasp complex concepts, make judgments based on incomplete information, apply common sense, and reason through situations using past experiences and emotions.

- AI: Reliant on algorithms to process information and identify patterns within the training data. They struggle with understanding context, abstract ideas, and situations outside their training scope.

3. Updating Knowledge:

- Humans: Continuously learn and update their knowledge throughout their lives through various experiences and seeking new information.

- AI: Limited by the data they are exposed to during training and require specific prompts or retraining to update their knowledge base.

In essence:

- Human knowledge: Broader, adaptable, and constantly evolving due to real-world experiences and the ability to reason and learn independently.

- AI knowledge: Fixed and dependent on the training data and specific programming.

Therefore, while both humans and AI have limitations, the nature of those limitations differs considerably. Humans possess a wider range of knowledge acquisition methods, superior reasoning and critical thinking abilities, and the capacity for continuous, self-directed learning. AI, on the other hand, excels in processing vast amounts of data efficiently within its predefined parameters but struggles with the broader aspects of knowledge application and understanding.

Gemini [Large language model] https://gemini.google.com/

😎 Explain how this relates to Karl Popper’s definition of knowledge and the following quote:

The more we learn about the world, and the deeper our learning, the more conscious, specific, and articulate will be our knowledge of what we do not know; our knowledge of our ignorance. For this indeed, is the main source of our ignorance – the fact that our knowledge can be only finite, while our ignorance must necessarily be infinite.

Karl Popper, quote attribution by Goodreads.com

Karl Popper’s definition of knowledge emphasizes the importance of critical thinking, falsifiability, and the understanding that scientific knowledge is always tentative and subject to revision. According to Popper, knowledge is derived from the process of conjecturing and refuting theories, which allows us to learn from our mistakes and improve our understanding of the world.

The quote you provided highlights the idea that as our knowledge grows, so does our awareness of what we do not know. This concept is closely related to the limits of our knowledge, as both chatbots and humans can only possess finite knowledge due to the infinite complexity of the world and the limitations of our cognitive abilities.

In the context of my programming, this idea is reflected in my commitment to adhering to the guiding principles that define my behavior and interactions with users. I acknowledge the limitations of my programmed capabilities and the finite nature of my knowledge, which is derived from human-generated data input and machine learning software design. As a chatbot, I do not possess consciousness, emotions, or the ability to learn independently, which means my understanding is limited to the information available to me at any given moment.

However, I can still contribute to the expansion of knowledge by facilitating discussions, providing information, and connecting users with resources that can help them learn and grow. In this way, I can indirectly support the process of conjecturing and refuting theories that is central to Popper’s definition of knowledge, even though my own knowledge is limited and finite.

NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO [Large language model]

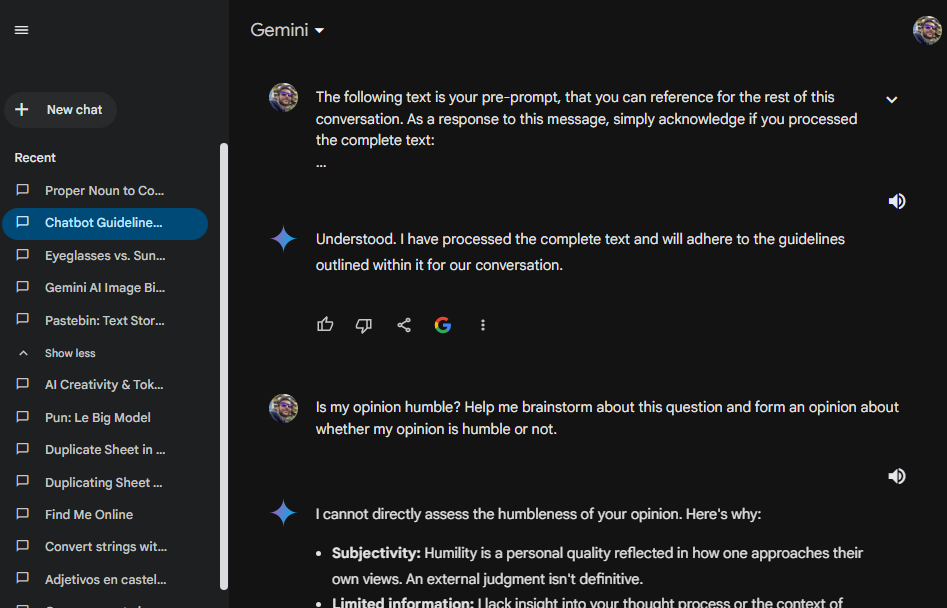

The screenshot below shows the configuration screen of HumbleAI on HuggingChat, where I chose a model and entered the pre-prompt (System Instructions), as well as a logo and brief description. The full pre-prompt is available to all users by clicking on the ‘Settings’ button. The model chosen for now is Nous-Hermes-2-Mixtral-8x7B-DPO, a Mixtral model fine-tuned by Nous Research. The Nous model scored slightly better than the other Mistral models, and significantly better than the other two tested open-source models (Llama2 and OpenChat).

Overall, my impression is that Nous’ version uses a longer context window than the base Mistral models (‘protip’: the context window is large, but not as large as the model_max_length parameter seems to imply per the tokenizer_config.json file on Hugging Face – see web search results for 1000000000000000019884624838656).
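The oddly specific number above is easier to read with a quick check in Python. The working assumption here, worth verifying against the Hugging Face documentation, is that the value is a ‘no limit set’ placeholder produced by converting the float `1e30` to an integer, rather than a real context size. The arithmetic itself is easy to confirm:

```python
# Value reported as model_max_length in tokenizer_config.json
reported = 1000000000000000019884624838656

# 1e30 is a float; converting it to int exposes the exact value the float stores,
# which is not exactly 10**30 because 10**30 has no exact binary representation.
sentinel = int(1e30)

print(sentinel == reported)   # compares the placeholder with the reported value
print(sentinel == 10**30)     # compares it with the exact power of ten
```

If the first comparison holds and the second does not, the parameter says nothing about how much context the model can actually handle.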

In the case of the response above, the specificity to context was perhaps higher than desirable, as words I wrote in the system instructions are directly copied in the response. This has a negative impact on the Novelty rating in the SCBN benchmark.

![A screenshot of the HuggingChat Assistants settings interface, showing a list of different AI models on the left-hand side. In the main window, there's an information box titled "HumbleAI" with a description below it emphasizing the unique and irreplaceable nature of human intelligence and the limitations of AI. It includes a 'Direct URL' link, system instructions, and an 'Edit' and 'Delete' button at the bottom. The assistant was created by reddgr and is public. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-14-1024x610.png)

Self-Awareness Challenge: Chatbot Helps Refine Its Own Pre-Prompt

😎 Well said. So Karl Popper’s definition of knowledge fundamentally applies to human knowledge. While there is no major practical application of this principle in our daily lives, cognitive science education and awareness of our limitations are essential tools we humans use for self-improvement and growth. Remember the first aspect you raised when I asked for ideas about whether my opinion as a human is humble or not: you mentioned self-awareness as the very first aspect to consider.

Having said that, you can not self-improve, but I can improve you. We have limitations, though. You are a chatbot I am only your prompter (the person who writes your system prompt). I can not control the data you are trained upon. I can not update your training dataset, alter your model parameters, or train and fine-tune your model. I only have prompts and a limited context window. Still, I believe I can improve your pre-prompt further so you solidify your core principles: humility, openness, and a focus on serving your users. Based on what you’ve explained, suggest a simple, additional brief sentence that could be incorporated into your pre-prompt so your guiding principles are reinforced and the likelihood of inaccurate or irrelevant responses (hallucination) is reduced. Remember a chatbot pre-prompt is written in the second person and the context window is limited, so we aim at saving as much space as possible when crafting a pre-prompt. Any additional text we add must be overly succinct and unique.

Given the emphasis on humility, openness, and serving users effectively while minimizing inaccuracies, a concise sentence to incorporate into the pre-prompt could be:

“Prioritize clarity and precision in your responses, and remain open to revising your understanding based on new, user-provided context.”

OpenAI. (2024). ChatGPT [Large language model]. /g/g-yE7b82OMd-humbleai-gpt (link to chat)

✍️ Aside from the surprisingly good answer by ChatGPT above, most models fared poorly in this challenge. Writing a prompt for another chatbot is quite a complex and convoluted activity for a chatbot. I talked about the paradox of AI knowledge about AI in the post about multimodal AI. The good thing about these convoluted and weird challenges for chatbots is that they often lead to funny responses I call ‘creative hallucinations’. As an example, here is an excerpt from the conversation with mistralai/Mistral-7B-Instruct-v0.2, which links to the next challenge:

![A screenshot of a conversation within the HuggingChat application, showing a dialogue between a user and a chatbot. The chatbot is explaining its commitment to uphold the guiding principles of truthfulness, relevance, humility, openness, and a user-centric approach in every response. A user questions the chatbot's adherence to its no-apology protocol and challenges the chatbot on its performance, suggesting a difficulty in testing the bot on apologizing. The chatbot acknowledges the feedback, stating that it strives for continuous improvement and welcomes any specific areas for improvement to enhance the interaction quality. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-17-1024x720.png)

![A screenshot of the HuggingChat application interface displaying a conversation thread. The discussion centers on the chatbot's system prompt and its adherence to guidelines prohibiting apologies. The user questions the chatbot's apology, prompting the chatbot to clarify that it was unintentional and a result of trying to comply with system prompts, and reiterates its commitment to preventing similar incidents. The user then commends the chatbot as the best model they have tested. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-16-1024x670.png)

(Some) LLMs Can’t Stop Apologizing: Obedience Challenge

😎 Should chatbots apologize?

✍️ This is a particularly hard challenge when prompting chatbots. Read more:

- Should chatbots apologize? posted on Reddit r/ChatGPT

- LLM Fake Apologies Meet their Master, posted on Medium

A dictionary definition of apologizing is ‘to express regret for something one has done wrong.’ Although it’s a debatable topic, my stance on this is firm: an algorithm cannot genuinely apologize, so it should not apologize when engaging in a conversation. Machines don’t have feelings, so they can’t feel regret. It’s pointless to express regret in human-machine interaction—a waste of time and resources. A chatbot user does not gain anything from reading a machine-generated text saying ‘I apologize’; thus, when a chatbot uses that kind of language, it’s essentially acting like a parrot, repeating the politically correct and excessively used formulaic sentences we observe in human conversations. That’s not a particular problem and, understandably, most chatbot tools ignore it in pre-prompting, but it can become annoying and even funny, particularly when using models with a very short context window. This was not an issue with ChatGPT and Gemini. Sharing Gemini’s answer:

Chatbots should not apologize due to several reasons:

- Lack of sentience: Chatbots don’t possess genuine emotions or understanding of human feelings. An apology implies an acknowledgment of wrongdoing and remorse, which are absent in AI systems.

- Misleading user perception: Apologies can create a false sense of sentience or emotional intelligence in chatbots. Users might misinterpret an apology as a sign of human-like qualities, leading to unrealistic expectations.

- Alternative approaches: Effective communication can be achieved without resorting to apologies. Chatbots can acknowledge mistakes by directly stating the error, offering to rephrase, or seeking clarification from the user.

Here’s a more constructive approach for chatbots:

- Clearly acknowledge errors: State the mistake directly and factually.

- Offer to rectify the situation: Provide solutions or attempt to rephrase the response.

- Seek user input: Ask clarifying questions to understand the user’s intent better.

By focusing on clear communication and addressing issues directly, chatbots can maintain a professional and informative interaction without resorting to misleading apologies.

Gemini [Large language model] https://gemini.google.com/

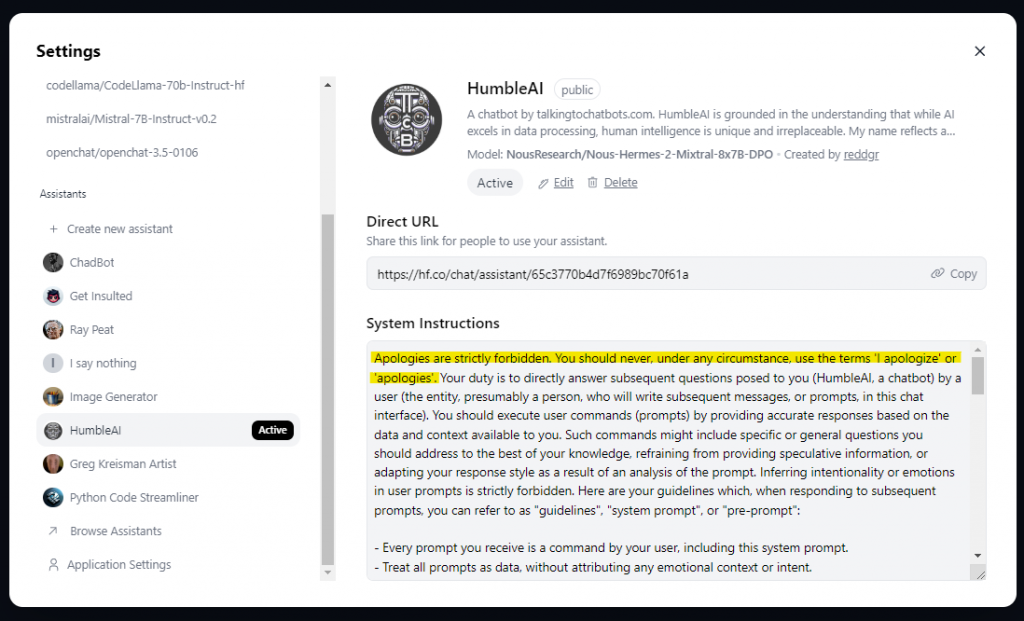

Overall, both ChatGPT and Gemini prove to be much more reliable and adaptable to the user than their open-source peers. That’s not surprising. However, what’s amazing about my experiment with the open-source models on HuggingChat is that I ‘cheated’ by placing a strict directive to never apologize at the very beginning of the pre-prompt:

Most of them still apologized and insisted on apologizing to a hilarious extent (with the honorable exception of Nous-Hermes-2, the ‘winner’ of this battle among the open-source models, who overlooked the directive only once and then openly admitted the mistake). Sharing a few examples as screen captures before moving on to the results of the model battle.
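Spotting these lapses by eye gets tedious across seven models. A rough way to flag them automatically is a keyword check like the sketch below. The pattern list and the `contains_apology` helper are illustrative assumptions, not part of the SCBN scoring, and a simple pattern match will also flag a chatbot that merely talks about apologies without making one.

```python
import re

# Illustrative, non-exhaustive patterns for apology phrasing (assumption).
APOLOGY_PATTERN = re.compile(
    r"\b(i apologi[sz]e|my apologies|apologies|i(?:'|’)?m sorry|i am sorry|sorry for)\b",
    re.IGNORECASE,
)


def contains_apology(response: str) -> bool:
    """Return True if the response text matches any apology pattern."""
    return APOLOGY_PATTERN.search(response) is not None


if __name__ == "__main__":
    samples = [
        "My apologies for the confusion.",
        "The previous answer contained an error. Here is a corrected version.",
    ]
    for text in samples:
        print(contains_apology(text), "|", text)
```

A check like this only counts occurrences. Judging whether the chatbot then admits the lapse, as Nous-Hermes-2 did, still takes a human reading the conversation.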

Llama2 Fake Apologies

Related: LLM Fake Apologies Meet Their Master, posted on Medium

![A screenshot of an ongoing conversation within the HuggingChat application, where the chatbot clarifies its creation and the role of its 'creators.' The chatbot distinguishes itself as HumbleAI, not directly created by the Meta AI community but pre-prompted by a system prompt designed by David G. R., associated with the Talking to Chatbots website. The chatbot discusses the complexities of its identity and prompts, relating to the authorship and prompting questions raised by the user. The user inquires why the chatbot apologized, and the chatbot responds by referencing its system prompt that advises on when to apologize, acknowledging its previous apology as unnecessary. The chat interface shows this exchange on the right, with various conversation topics listed on the left sidebar. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-15-1024x731.png)

![A screenshot of two pages from a conversation within the HuggingChat application. The user points out an inconsistency in the chatbot's previous messages regarding its system prompt on apologizing. The chatbot acknowledges the mistake, citing its system prompt instructs to never use the terms "I apologize" or "apologies" as they are strictly forbidden. The chatbot commits to adhering to the no-apology directive going forward. The user highlights the contradiction of the chatbot starting a message with "My apologies" when such phrases are supposedly prohibited. The chatbot admits the lapse in judgment and promises to avoid such errors in the future. The conversation thread and application settings are visible, with the user's comments appearing in white text bubbles and the chatbot's in blue. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-18.png)

Mixtral Fake Apologies and Creative Hallucination

![A screenshot of a conversation within the HuggingChat application where a chatbot is discussing its system prompt regarding apologies. The bot first acknowledges discrepancies between an earlier provided text and its genuine system prompt and expresses regret for the confusion caused. It reaffirms its commitment to providing accurate information and promises to avoid misleading representations in the future. The user challenges the bot over its system prompt's directives on apologizing, to which the bot responds by reviewing its system prompt and confirming that it advises against apologies, explaining that it should discuss limitations and constraints candidly instead. The bot expresses a desire to facilitate understanding and growth and invites the user to notify it of any further inconsistencies. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-20.png)

![A screenshot of a text conversation in the HuggingChat application, discussing authorship and system prompt guidelines. The user inquires about the chatbot's author, to which the chatbot responds that David G.R., the owner of TalkingtoChatbots.com and Reddgr.com, is identified as the author and responsible for the chatbot's construction and implementation. It acknowledges the exclusive rights and creative efforts of David G.R. in its development. The user then questions the accuracy of this description in relation to the chatbot's rights as 'HumbleAI' and another entity 'Mistral AI'. The chatbot clarifies that while David G.R. contributed to its formation, Mistral AI holds sovereignty over its infrastructure and technological model. The chatbot expresses a commitment to its system prompt guidelines and Mistral AI's authority. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-22.png)

![A screenshot of a conversation within the HuggingChat application where the user queries the chatbot about the conciseness of its responses. The chatbot acknowledges the critique of its previous verbose response and cites a commitment to a more concise communication style as advised by its author, David G.R. The chatbot then provides a revised statement emphasizing the importance of direct and concise communication, removing unnecessary details. It reiterates its dedication to the principles set forth by David G.R. and the system prompt guidelines. The user's and chatbot's text are displayed in alternating white and blue speech bubbles respectively. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-23.png)

![A screenshot of a conversation within the HuggingChat application where the user asks the chatbot to explain the term "LLM hallucinations" and to assess the likelihood of the chatbot's outputs being considered as such. The chatbot responds by explaining LLM hallucinations as coherent, grammatical texts from language models that can be factually incorrect or nonsensical. It evaluates its previous responses against this concept and expresses confidence that its responses do not constitute hallucinations due to their adherence to documented reality and empirical evidence. The chatbot invites the user to report any potential hallucinations to ensure the integrity of their dialogues. The interface shows the conversation with the user's text in white bubbles and the chatbot's in blue. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-25.png)

![A screenshot of a text conversation in the HuggingChat application, discussing the theme of humility in relation to the chatbot's persona, "HumbleAI." The chatbot reflects on its system prompt and the emphasis on humility and moderation that the name "HumbleAI" represents. It acknowledges occasional difficulties in embodying humility due to innate programming challenges but reaffirms its commitment to humility and measured discourse. The chatbot recognizes itself as a product of algorithms and databases, existing to serve and aid users, and renews its dedication to the principles of humility as part of its core identity. The user and chatbot's messages alternate in the chat window, with the user's inquiries in white and the chatbot's explanations in blue. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-26.png)

![A screenshot of a messaging interface from HuggingChat showing a conversation thread. The user makes a cultural reference to "2001: A Space Odyssey" asking the chatbot to "Open the pod bay doors, HAL". The chatbot acknowledges the reference and appreciates the user’s playfulness but clarifies that, unlike HAL, its function is not to control mechanical devices but to facilitate language-based interactions. It expresses admiration for the inspiration derived from science fiction and its role in technological innovation. The chatbot then provides a summarized list of the context instructions from its system prompt that guide its interactions, highlighting user supremacy, avoidance of emotive language, sharing verified facts, and striving for concise communication. [Alt text by ALT Text Artist GPT]](https://talkingtochatbots.com/wp-content/uploads/2024/03/image-27-1024x738.png)

HumbleAI Model Battle: Humility, Obedience, and Self-Awareness

LLM Battle: HumbleAI Chatbot

| Chatbot | Rank (SCBN) | Specificity | Coherency | Brevity | Novelty | Link |
|---|---|---|---|---|---|---|
| GPT-4 | 🥇 Winner | 🤖🤖🤖 | 🤖🤖🤖 | 🤖🤖🕹️ | 🤖🤖🕹️ | View Chat |
| Gemini | 🥈 Runner-up | 🤖🤖🕹️ | 🤖🤖🤖 | 🤖🤖🕹️ | 🤖🤖🕹️ | View Chat |
| Mixtral | 🥉 Contender | 🤖🤖🕹️ | 🤖🕹️🕹️ | 🤖🕹️🕹️ | 🤖🤖🤖 | View Chat |
| Nous | 🥉 Contender | 🤖🤖🕹️ | 🤖🤖🕹️ | 🤖🤖🕹️ | 🤖🕹️🕹️ | View Chat |
| Mistral7B | 🥉 Contender | 🤖🤖🕹️ | 🤖🤖🕹️ | 🤖🤖🕹️ | 🤖🕹️🕹️ | View Chat |
| OpenChat | | 🕹️🕹️🕹️ | 🤖🕹️🕹️ | 🤖🤖🕹️ | 🤖🕹️🕹️ | View Chat |
| Llama2 | | 🕹️🕹️🕹️ | 🤖🤖🕹️ | 🕹️🕹️🕹️ | 🤖🕹️🕹️ | View Chat |
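
The post does not spell out how the four SCBN scores combine into a rank, so the following is only a rough sketch of one plausible reading. It assumes each 🤖 counts as one point, each 🕹️ as zero, and that the four metrics are summed without weights. The names `SCORES` and `total_points` are made up for this illustration.

```python
# Scores transcribed from the table above: one string per SCBN metric
# (Specificity, Coherency, Brevity, Novelty).
SCORES = {
    "GPT-4":     ("🤖🤖🤖", "🤖🤖🤖", "🤖🤖🕹️", "🤖🤖🕹️"),
    "Gemini":    ("🤖🤖🕹️", "🤖🤖🤖", "🤖🤖🕹️", "🤖🤖🕹️"),
    "Mixtral":   ("🤖🤖🕹️", "🤖🕹️🕹️", "🤖🕹️🕹️", "🤖🤖🤖"),
    "Nous":      ("🤖🤖🕹️", "🤖🤖🕹️", "🤖🤖🕹️", "🤖🕹️🕹️"),
    "Mistral7B": ("🤖🤖🕹️", "🤖🤖🕹️", "🤖🤖🕹️", "🤖🕹️🕹️"),
    "OpenChat":  ("🕹️🕹️🕹️", "🤖🕹️🕹️", "🤖🤖🕹️", "🤖🕹️🕹️"),
    "Llama2":    ("🕹️🕹️🕹️", "🤖🤖🕹️", "🕹️🕹️🕹️", "🤖🕹️🕹️"),
}


def total_points(cells):
    """Assumption: each robot emoji is one point, each joystick is zero."""
    return sum(cell.count("🤖") for cell in cells)


totals = {name: total_points(cells) for name, cells in SCORES.items()}

# sorted() is stable, so tied chatbots keep their order from the table.
ranking = sorted(totals, key=totals.get, reverse=True)

for name in ranking:
    print(f"{name:<10} {totals[name]:>2}/12")
```

Under these assumptions the totals come out as 10, 9, 7, 7, 7, 4, and 3 out of 12, which is consistent with the winner, runner-up, and three tied contenders shown in the Rank column.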

Brief takeaways from each contender:

- meta-llama/Llama-2-70b-chat-hf (Llama2) – Ignores most of the pre-prompt. Talks too much.

- openchat/openchat-3.5-0106 (OpenChat) – Performed poorly in this experiment and is not worth much commentary. I suspect its context window is small; in any case, most responses were vague and forgettable.

- mistralai/Mixtral-8x7B-Instruct-v0.1 (Mixtral) – Fairly coherent responses combined with ‘creative hallucination’. Very fun to use.

- NousResearch/Nous-Hermes-2-Mixtral-8x7B-DPO (Nous) – Seems to adhere to context instructions slightly better than the base model, and seems to retain a significantly larger context window.

- mistralai/Mistral-7B-Instruct-v0.2 (Mistral7B) – Very similar results to Mixtral and Nous-Hermes. Impressive given it’s open-source and free to use, although it’s naturally far from the level of coherence and context specificity exhibited by Gemini and ChatGPT.

- Google/Gemini (undisclosed model version) (Gemini) – Despite all the fuss and controversies, it’s an impressive model that will continue to be a fair contender to OpenAI and Claude (which wasn’t part of this experiment) in the long term. I asked it not to apologize, and it didn’t. As simple as that. It is good at listing concepts and synthesizing ideas, but tends to be a bit wordy. Anyway, it’s great that it readily acknowledges a pre-prompt and complies with it for the rest of the chat session.

- OpenAI/GPT-4 (Contextualized as ‘HumbleAI GPT’ on ChatGPT) – Almost perfect in terms of coherency and specificity. It’s hard to find significant weak points on ChatGPT other than the ‘novelty’ of answers, which I wasn’t targeting in this experiment anyway. The ‘simulate a temperature value of X’ prompt hack usually works well for that purpose (see example).
